A previous blog discussed how to leverage Azure’s Application Insights for Log Monitoring. This blog will talk about another technique – how ML can be leveraged to build real-time insights.

Technology advancement has resulted in a rapid change in the IT environment over the last couple of decades. Modern systems, including infrastructure and applications, are expanding exponentially to meet the current demands. On one end, they are scaling out to multiple distributed systems, and on the other, they are scaling up to high-performance computing with heaps of data centers.

Furthermore, with customer centricity being the focus, modern systems are becoming core for supporting different services like social media platforms, search engines, and building various decision-making systems. Thus, the availability of information at everyone’s disposal, 24 hours, seven days a week across the globe, becomes paramount. The system’s availability and reliability become critical KPIs to be measured for a successful IT Team to enable the same. Any disruptions, like service or system outages or bad QoS, will break down the application, resulting in dissatisfied customers, higher churn rate, and substantial revenue loss. Therefore, constant monitoring of these systems has become a norm nowadays.

Pre-empting any abnormal system behaviors on time has become essential to prevent disruptions. Traditionally, when the log data was less, it was manually inspected using keyword search or rule matching. However, with this modern large-scale distributed system, the volume of logs has exploded hugely, rendering manual inspection infeasible. Hence, Machine Learning (ML) for log analysis has been getting a lot of traction recently.

Machine Learning for log detection

Organizations are increasingly looking to adopt Machine Learning for log analysis to understand, detect, and predict undesired data incidents. For instance, any large finance organization would have approximately 10,000+ apps at different maturity levels in their environment, which presents an opportunity for an ML-based intelligent log analysis solution. These apps have logs and events aggregated in OS logs, Network NMS logs, Machine Data, APM, Netcool, BMC capacity, or Splunk. However, considering the logs are vast and unstructured, it is difficult for an ML model to deduce if a specific logline is unique rather than just a different example of an event it has seen before.

It becomes important to feed the ML models with the right set of valuable information to analyze and raise a flag appropriately. Hence, anomaly detection (outlier analysis) becomes a critical data analysis procedure. Before we discuss Anomaly detection and understand how ML models can be utilized, here’s a quick overview of how the logs are procured, read, and fed to the model.

Log analysis for anomaly detection consists of three main stages :

- Log Collection: how do you collect the logs from different sources

- Log Parsing: how do you read the log message to store them appropriately

- Feature extraction: how do you extract valuable information that can be fed into ML models and then determine anomaly detection

Anomaly Detection

Anomaly Detection is a data mining technique that tries to identify unique events that can raise apprehensions by being statistically different from the rest of the datasets. Anomaly detection can be done using various ML concepts. However, with ML models, the outcomes would have a higher accuracy when there is more data available for the model to learn and predict. However, for logs, it might pose a couple of challenges. First is the availability of necessary data, which includes weeks and months of data to make the right predictions. With any changes in the application’s behavior, the model must re-learn the logs again and make appropriate predictions. Based on the amount and type of data involved and the ML concepts employed, anomaly detection can be leveraged from supervised and unsupervised methods.

- Supervised methods: Needs labeled training data with clear distinction on what is a regular occurrence and what is not. Then classification techniques are utilized to study a model to best use the discrimination among the instances.

- Unsupervised methods: Labels are not required. The models will try to work out the patterns and correlation of the data by themselves, which then are used to serve predictions

Multiple anomaly detection methods are available in both categories. For instance, supervised methods like Decision Tree, Logistic Regression, Random Forest, and SVM can be utilized. For example, SVM categorizes the probability of an incident associated with specific words in a logline. Familiar words such as “unsuccessful “or “error” would be associated with an incident and receive a higher probability score than other words. The collective score of the Log message is used to detect an issue. However, as mentioned earlier, supervised methods would require lots of data to predict accurately, and application behavior keeps changing, which makes deploying them in real-time environments difficult.

Unlike supervised methods, unsupervised learning’s training data is unlabelled, making it more conducive for a real-time production environment. Typical unsupervised approaches include clustering methods, PCA, and association rule mining.

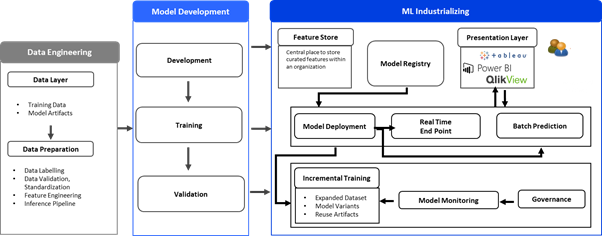

Figure 1: Data Science/ML Sandbox Architecture

Recently, organizations have also started using deep learning to detect anomalies in logs. A recent popular Neural network is Long Short-Term Memory (LSTM). The neural network model is utilized to model a system log as a natural language sequence. This enables studying log patterns and detecting anomalies when there is a deviation from the trained model. In addition, workflows are also constructed under the underlying system log so that developers can analyze the anomaly and perform the necessary RCA for it.

The challenge with this approach is that, apart from the large volume of data required to train, Deep Learning can be very compute-intensive. Although GPU instances are available to train the models, they come at a high cost. Also, third-party services like Nginx and MySQL are available to train deep learning algorithms. Still, these services concentrate on known environments on which they are being run, and any custom software which belongs to the landscape are sometimes ignored/missed from monitoring

Potential use cases of real-time Log analysis

- Security Compliance: Storing sensitive data is very critical in Banking and Healthcare Industry. Advanced analytics and regex can be leveraged to flag PII and PHI data stored elsewhere, apart from the destination location. Hence, identification of PII, PCI, PHI data early in a log lifecycle become critical and, thereby reducing the risk of compliance violations

- System Health Monitoring & Failure Prediction: Any application or system outage has commercial ramifications. Thereby, it becomes essential to Identify the key parameters contributing to system failure and ensure the warning is raised for potential outages. This use case could be extended to any discrete manufacturing plant, where it becomes crucial to have an effective maintenance strategy, thereby improving the overall equipment availability and reducing downtime. The PLCs log messages can be utilized for analysis.

- Network Management: In the telecommunication industry, data collected from different devices and elements all around the network can be efficiently monitored and analyzed to make real-time decisions. This can help detect problems before customers notice them, resulting in higher customer satisfaction and the opportunity to up-sell to higher devices by understanding the usage patterns.

- Security Monitoring: Post pandemic, cybercrimes are on the rise. All organizations need to keep realigning the existing security monitoring processes. By leveraging unsupervised machine learning algorithms, log analytics can become an effective security monitoring tool. This will help detect security breaches, event reconstruction, and faster recovery.

Conclusion

Multiple anomaly detection methods are available, but identifying anomalies requires proper definitions from data analysts. Various companies have been coming up with many anomaly detection tools, but the issue is the approach they showcase would only be suitable for that particular dataset and use case. Techniques in one field most likely cannot be utilized in another, and instead, they would be infective. Hence, it is essential to narrow down the problem and test the approach using existing benchmarks or datasets (if available) before introducing it to the production.

At LatentView Analytics, our data science experts seize the opportunity to make sense of big data and turn them into insights for our clients to make data-driven business decisions. Get in touch with us at marketing@latentview.com to know more.