A recent Gartner survey found that 73% of companies have invested, or plan to invest, in Big Data within the next 24 months. Yet 60% of them will fail to move beyond the pilot stage, unable to demonstrate business value.

At the same time, of the companies that have already invested, 33% have started to gain a competitive advantage from their Big Data. There is a huge chasm between deployment and demonstrating ROI, and most companies are falling into it.

Much of the success of a Big Data strategy lies in the Data Architecture.

It's no longer adequate to collect data just for internal compliance. Data requirements are shifting from purely procedural data (from ERP systems, for example) to data for profit: the kind that can lead to significant business insights.

This requires doing things differently, starting with identifying a clear Big Data goal.

What does the organization hope to achieve from Big Data?

According to John D*, a senior data scientist at a Fortune 100 technology company, “companies are often tempted to ask questions just based on the data that’s available. Instead, they need to understand and frame their business strategy questions first—and then gather the data and perform the analysis that answers it.”

His company uses large-scale datasets to understand its customers better, so that it can delight them with its products and services.

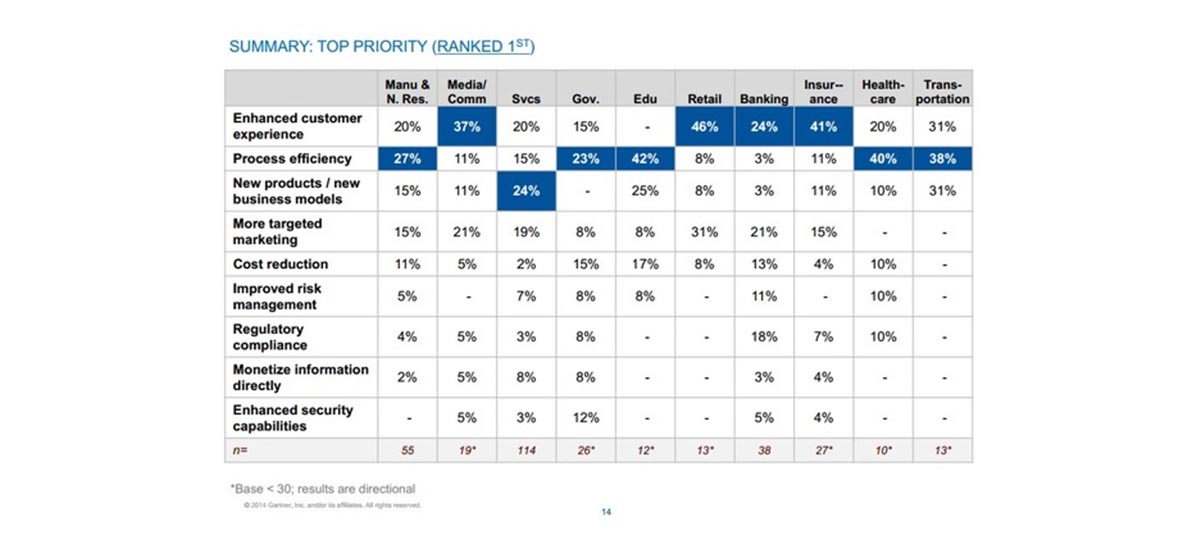

According to a Gartner study, ‘Enhanced Customer Experience’ is the number one reason companies turn to Big Data analytics, followed by ‘Process Efficiency’.

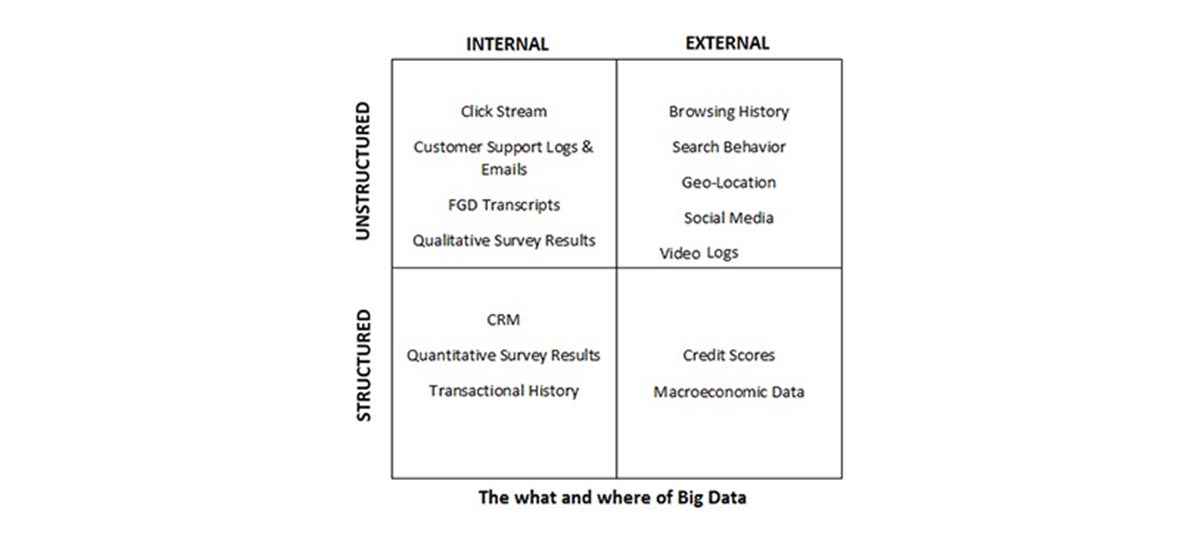

Both require blending internal data with external sources. To enhance customer experience, for example, companies should look at geo-location data, transaction history, CRM data, credit scores, customer service logs and HTML data. To improve process efficiency, in fraud detection for instance, companies should use transaction history, CRM data, credit scores and browsing history.
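The blending step itself can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production design: the inputs are hypothetical in-memory stand-ins for an internal CRM extract (customer ID to segment), an external credit-score feed, and internal transaction history, and all record types and field names are invented for the example. The sketch left-joins them on a shared customer ID, so customers missing from the external source keep a `None` credit score instead of being dropped.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


# Hypothetical record types and field names, for illustration only.
@dataclass
class Transaction:
    customer_id: str
    amount: float


@dataclass
class BlendedProfile:
    customer_id: str
    segment: str                 # internal attribute from the CRM
    credit_score: Optional[int]  # external attribute; None if the feed has no match
    total_spend: float           # aggregated from internal transaction history


def blend_customer_data(
    crm: Dict[str, str],
    credit_scores: Dict[str, int],
    transactions: List[Transaction],
) -> List[BlendedProfile]:
    """Left-join CRM records with external credit scores and total spend,
    keyed on customer_id. Every CRM customer appears in the output."""
    # Aggregate internal transactions per customer first.
    spend: Dict[str, float] = {}
    for tx in transactions:
        spend[tx.customer_id] = spend.get(tx.customer_id, 0.0) + tx.amount

    profiles = []
    for customer_id, segment in crm.items():
        profiles.append(
            BlendedProfile(
                customer_id=customer_id,
                segment=segment,
                credit_score=credit_scores.get(customer_id),
                total_spend=spend.get(customer_id, 0.0),
            )
        )
    return profiles


if __name__ == "__main__":
    crm = {"c1": "premium", "c2": "standard"}
    credit_scores = {"c1": 720}  # the external feed has no record for c2
    transactions = [
        Transaction("c1", 50.0),
        Transaction("c1", 25.5),
        Transaction("c2", 10.0),
    ]
    for profile in blend_customer_data(crm, credit_scores, transactions):
        print(profile)
```

Keeping unmatched customers with an explicit `None` makes gaps in external coverage visible downstream, which is one reasonable choice when external sources are incomplete.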

Once you start blending data sources, new challenges arise. The first is the obvious one: the larger volume of data that now needs to be stored and cleaned. Second, much of the data is unstructured, and it must be converted into structured data before it can be analyzed for insights. Because of the velocity at which data is generated, this conversion has to happen in near real time. Finally, the data is no longer just text or numbers; it also includes video.
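To make the unstructured-to-structured step more concrete, here is a minimal sketch that turns free-text customer service log lines into structured records as they arrive. The log format, the regular expression and the keyword-to-topic mapping are all hypothetical assumptions made for illustration; a real pipeline would more likely run inside a stream-processing framework and use a trained classifier rather than keywords. The generator handles one line at a time, which mimics the near-real-time requirement instead of waiting for a full batch.

```python
import re
from typing import Iterable, Iterator, Optional, TypedDict

# Hypothetical free-text log format, assumed for this example:
# "2024-03-01 10:15 agent=ana customer=c42 Customer reports a duplicate charge"
LOG_PATTERN = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2}) (?P<time>\d{2}:\d{2}) "
    r"agent=(?P<agent>\w+) customer=(?P<customer>\w+) (?P<text>.*)$"
)

# Illustrative keyword mapping (an assumption); checked in insertion order.
TOPIC_KEYWORDS = {
    "billing": ("charge", "refund", "invoice"),
    "delivery": ("late", "shipping", "package"),
}


class ServiceEvent(TypedDict):
    timestamp: str
    agent: str
    customer_id: str
    topic: str
    text: str


def classify(text: str) -> str:
    """Assign the first topic whose keywords appear in the text."""
    lowered = text.lower()
    for topic, keywords in TOPIC_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return topic
    return "other"


def parse_line(line: str) -> Optional[ServiceEvent]:
    """Convert one unstructured log line into a structured record."""
    match = LOG_PATTERN.match(line.strip())
    if match is None:
        return None  # unparseable; in practice, keep it aside for inspection
    return ServiceEvent(
        timestamp=f"{match['date']}T{match['time']}",
        agent=match["agent"],
        customer_id=match["customer"],
        topic=classify(match["text"]),
        text=match["text"],
    )


def structure_stream(lines: Iterable[str]) -> Iterator[ServiceEvent]:
    """Lazily structure lines as they arrive, skipping unparseable ones."""
    for line in lines:
        event = parse_line(line)
        if event is not None:
            yield event


if __name__ == "__main__":
    incoming = [
        "2024-03-01 10:15 agent=ana customer=c42 Customer reports a duplicate charge",
        "not a log line",
        "2024-03-01 10:20 agent=bo customer=c7 Package arrived late",
    ]
    for event in structure_stream(incoming):
        print(event)
```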